The Quick Rundown:

Cartesia is building real-time, on-device intelligence across multiple modalities via SSMs – an architecture they pioneered that solves the quadratic scaling bottleneck of transformers.

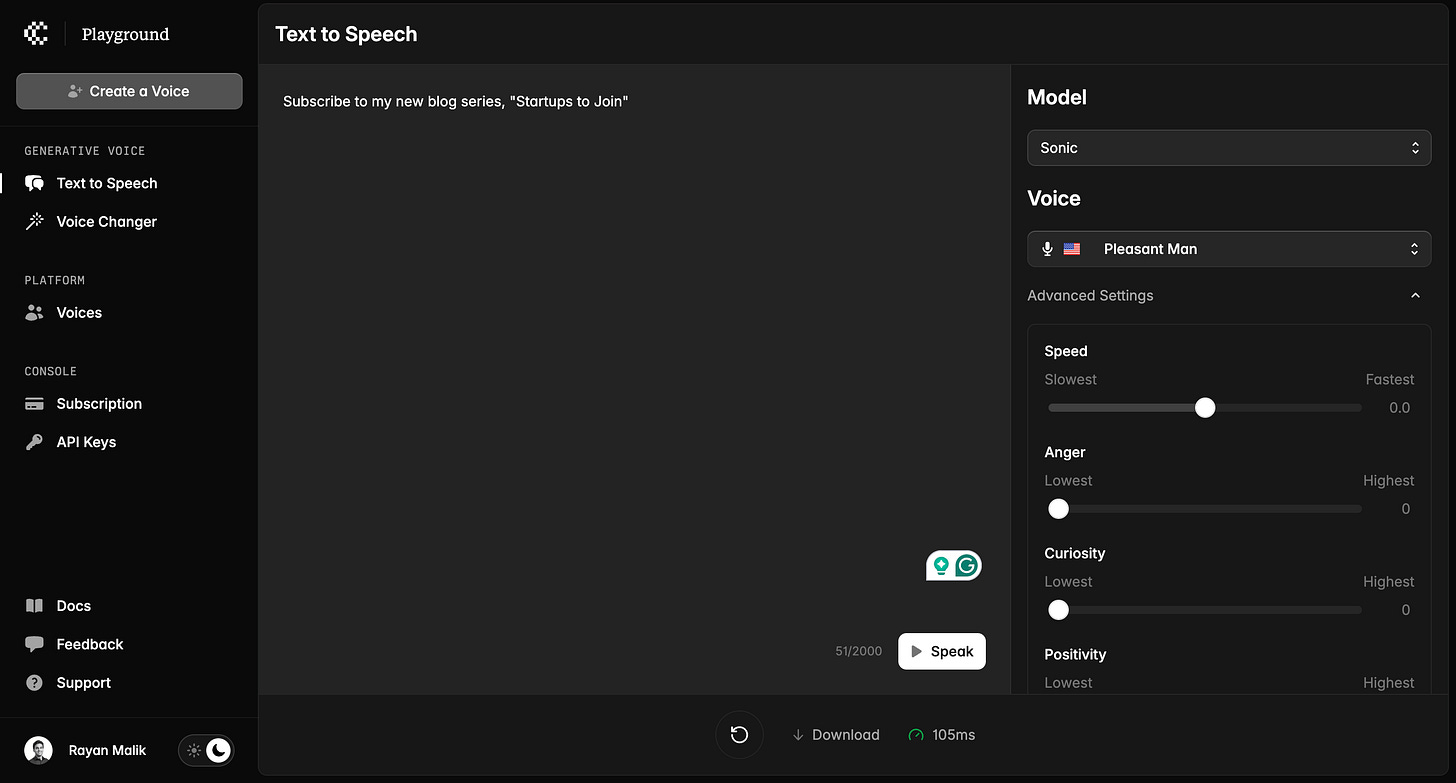

They are starting with audio generation and their first product is Sonic, the world’s fastest generative voice API with a latency of just 90ms.

They’ve raised $27M in funding led by Index Ventures with participation from Lightspeed, Factory, Conviction, General Catalyst, A*, SV Angel, and 90 angel investors.

They’re actively hiring for 20 roles, including AI researchers, engineers, designers, product growth, and sales.

This is the second edition of “Startups to Join” – a blog for engineers and designers to discover early-stage (pre-seed through Series A) startups that are reimagining industries and on the path to becoming enduring companies.

Every week, I review hundreds of startups and spend hours talking to VCs and founders to write about one company that’s building something special. Whether you’re looking for the next unicorn to join or just curious about what top VCs are investing in, subscribe below to stay up to date:

Is Attention All We Need?

Before the world was introduced to transformers in 2017, the majority of AI models, particularly for sequence-related tasks, were powered by architectures like RNNs and LSTMs. These models enabled many important breakthroughs because they were the first architectures to effectively process sequential data by maintaining an internal memory state. This meant they could consider previous context when processing each new input, making them well suited to tasks where immediate context matters most.

Yet, these architectures had two fundamental limitations:

First, they struggled with long-range dependencies due to the vanishing gradient problem. During training, as gradients flowed backward through the sequence, they would become exponentially smaller, making it difficult for the model to learn connections between distant elements. This meant that while an LSTM might easily remember context from a few steps ago, it would struggle to connect information across longer input sequences, such as understanding how the beginning of a paragraph relates to its end.

Second, these architectures were inherently sequential, processing information one step at a time, with each step depending on the completion of all previous steps. This created a computational bottleneck: processing a 100-word document required 100 sequential operations that couldn't be parallelized (i.e., processed concurrently). Because most practical AI tasks demand processing long sequences and training on large datasets, this severely constrained how far these architectures could scale.

As a result, while these architectures could handle tasks requiring short-term dependencies, they struggled with anything that required broader context comprehension, like understanding entire documents or maintaining consistency in long-form text generation. These limitations severely restricted the practical applications of RNNs and LSTMs.

In 2017, the transformer, a new architecture developed by eight Google researchers, was introduced to solve these limitations. At its core, the transformer introduced an attention layer that allows a model to assign importance (attention) to every part of the input sequence simultaneously, regardless of how far apart those parts are. This self-attention mechanism computes how much each word (or token) in a sequence relates to every other word. For instance, consider the sentence: "Substack is my favorite platform to publish writing". While an RNN would need to pass information through each word one step at a time, a transformer can directly link "platform" back to "Substack" in a single step.
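The attention step described above can be sketched in a few lines of plain Python. This is a minimal, illustrative version of scaled dot-product attention, assuming tiny hand-picked 2-dimensional embeddings and reusing the same vectors as queries, keys, and values; real transformers learn separate query, key, and value projections and use many attention heads. The function names `softmax` and `self_attention` are our own.

```python
import math

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a whole sequence.

    Returns (outputs, weights), where weights[i][j] is how much token i attends to token j.
    """
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Score this query against every key, near or far, in one pass.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # The output is the attention-weighted average of all value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))])
    return outputs, weights

tokens = ["Substack", "is", "my", "favorite", "platform"]
# Hypothetical 2-d embeddings: "Substack" and "platform" point in the same direction.
emb = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(emb, emb, emb)
print([round(w, 3) for w in weights[4]])  # attention weights for "platform"
```

Row `weights[4]` ("platform") puts more weight on "Substack" than on "is", and it gets that link in a single pass over the sequence rather than by relaying it through the words in between.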

Additionally, unlike RNNs and LSTMs, which process tokens sequentially, transformers compute attention in parallel. Instead of analyzing one token at a time, the model computes the relationships between all tokens in a single step, using matrix operations that GPUs and TPUs are optimized for. Together, these properties address both fundamental limitations of RNNs: direct token-to-token connections let the model capture global dependencies, and parallel processing speeds up computation.
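To make the contrast concrete, here is a toy comparison assuming scalar inputs and made-up weights (`w_h` and `w_x` are hypothetical). The RNN loop cannot compute step t until step t-1 has finished, whereas each attention output row depends only on the inputs, so rows can be computed in any order or concurrently. The thread pool only demonstrates that independence; real speedups come from batched matrix kernels on GPUs and TPUs, not Python threads.

```python
import math
from concurrent.futures import ThreadPoolExecutor

def rnn_states(xs, w_h=0.5, w_x=1.0):
    # Each hidden state needs the previous one: N strictly sequential steps.
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w_h * h + w_x * x)
        states.append(h)
    return states

def attention_row(i, xs):
    # Row i depends only on the inputs, never on another row's result.
    scores = [xs[i] * xj for xj in xs]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return sum(e / total * xj for e, xj in zip(exps, xs))

xs = [0.1, 0.4, -0.3, 0.8]
states = rnn_states(xs)

# Attention rows can be farmed out concurrently and still match the serial result.
with ThreadPoolExecutor() as pool:
    parallel_rows = list(pool.map(lambda i: attention_row(i, xs), range(len(xs))))
serial_rows = [attention_row(i, xs) for i in range(len(xs))]
print(parallel_rows == serial_rows)  # True
```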

Today, nearly every sequence-modeling AI application we interact with, from LLMs like ChatGPT to coding assistants like Cursor, relies on the transformer architecture. Yet if you’ve spent a lot of time using any of these tools, you’ve likely noticed that they struggle to remember context from input you provided a while ago.

Why? Because the attention mechanism is computationally expensive. For every new token, a transformer must calculate attention scores against every previous token, so total computation grows quadratically (O(N²)) with sequence length N. In addition, these models store the key and value vectors for each token in a KV cache, which grows linearly (O(N)) with sequence length. This dual burden means that as inputs grow, computation and memory costs rise sharply, slowing the model and consuming substantial resources.

For example, because doubling the sequence length roughly quadruples the number of attention calculations, processing a 1-minute audio clip with a transformer requires roughly four times the attention compute of a 30-second clip, while its KV cache doubles in size.
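The arithmetic behind this example can be checked by counting causal attention pairs and cached key/value vectors. The token rate `TOKENS_PER_SECOND = 50` is a hypothetical value chosen for illustration, not a property of any real audio tokenizer; the ratios do not depend on it.

```python
TOKENS_PER_SECOND = 50  # hypothetical audio token rate, for illustration only

def causal_attention_pairs(n_tokens: int) -> int:
    # Token t scores itself plus every earlier token: 1 + 2 + ... + N = N(N+1)/2.
    return n_tokens * (n_tokens + 1) // 2

def kv_cache_vectors(n_tokens: int) -> int:
    # One key and one value vector cached per token (per layer and head).
    return 2 * n_tokens

short_n = 30 * TOKENS_PER_SECOND  # 30-second clip
long_n = 60 * TOKENS_PER_SECOND   # 1-minute clip

attention_ratio = causal_attention_pairs(long_n) / causal_attention_pairs(short_n)
cache_ratio = kv_cache_vectors(long_n) / kv_cache_vectors(short_n)
print(f"attention pairs: {attention_ratio:.3f}x, KV cache: {cache_ratio:.1f}x")
# attention pairs: 3.999x, KV cache: 2.0x
```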

Solving this challenge is an area of active research, with techniques like Linear Attention and Sliding Window Attention gaining popularity. Today, most large-scale models use a fixed context window to limit the maximum number of previous tokens considered for attention calculations, reducing computational costs and ensuring models are fast.
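Of the two techniques named above, sliding window attention is the simpler to sketch. In this minimal version (an illustration, not any particular model's implementation), each token attends only to itself and the previous `window - 1` tokens, so the number of attention pairs grows roughly as N × window instead of N²/2. Linear attention works differently and is not shown here; the helper names are our own.

```python
def attends(i: int, j: int, window: int) -> bool:
    # Causal sliding-window mask: token i may see token j if j is at most window-1 steps back.
    return j <= i and i - j < window

def sliding_window_pairs(n_tokens: int, window: int) -> int:
    # Closed form for the mask above: token t (1-indexed) sees min(t, window) tokens.
    if n_tokens <= window:
        return n_tokens * (n_tokens + 1) // 2
    return window * (window + 1) // 2 + (n_tokens - window) * window

def brute_force_pairs(n_tokens: int, window: int) -> int:
    # Count mask entries directly (O(N^2)); only for checking small cases.
    return sum(attends(i, j, window) for i in range(n_tokens) for j in range(n_tokens))

print(sliding_window_pairs(10_000, 128), sliding_window_pairs(20_000, 128))
# 1271872 2551872: doubling N roughly doubles the work instead of quadrupling it
```

The price of this design is that anything more than `window - 1` tokens back is invisible to that layer, which is exactly the limitation the next paragraphs describe.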

As of early 2024, GPT-4 Turbo has a context window of 128,000 tokens, roughly 100,000 words or 300 pages of text. This may seem substantial, but it is a real constraint for applications that process long sequences in real time: tokens that fall outside the window are simply not considered, and enlarging the window quickly becomes prohibitively expensive.

This context window also falls far short of human intelligence. Each year, humans process, understand, and remember roughly 1 billion text tokens, 10 billion audio tokens, and 1 trillion video tokens. So while a 128,000-token context window may suffice for basic LLM use, for tasks like video and audio generation, DNA processing, and AI agents, this quadratic scaling bottleneck is a critical limitation.
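A quick back-of-the-envelope calculation shows how small a 128,000-token window is against these yearly figures. It assumes, as a simplification, that tokens arrive at a steady rate around the clock (365-day year, no time off for sleep); the helper name `seconds_covered` is our own.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 (ignores leap years)
CONTEXT_WINDOW = 128_000               # GPT-4 Turbo's window, per the text above

def seconds_covered(tokens_per_year: float, window: int = CONTEXT_WINDOW) -> float:
    # How much wall-clock time a full context window spans at a steady token rate.
    tokens_per_second = tokens_per_year / SECONDS_PER_YEAR
    return window / tokens_per_second

for label, per_year in [("1 billion", 1e9), ("10 billion", 1e10), ("1 trillion", 1e12)]:
    print(f"{label} tokens/year -> window spans {seconds_covered(per_year):,.1f} s")
```

At the largest rate quoted above (1 trillion tokens per year), the entire window spans only about four seconds of input.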

Additionally, transformer-based models face deployment challenges. Because of their size and computational requirements, they are typically hosted in compute-heavy data centers and deployed on the cloud via APIs, making on-device deployment impractical for multiple reasons:

Memory Requirements: Large transformer models often need several gigabytes of RAM just to load their weights, plus high-performance GPUs or TPUs to run them.

Compute Intensity: The quadratic scaling of attention makes real-time processing challenging on device hardware.

Network Dependency: API-based deployment introduces latency and requires constant internet connectivity.
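The memory point is easy to quantify: weights alone take roughly (parameter count) × (bytes per parameter). The sketch below assumes a hypothetical 7-billion-parameter model and the standard byte widths of common numeric formats; it ignores activations, the KV cache, and runtime overhead, all of which add to the total.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}

def weight_memory_gb(n_params: float, dtype: str = "fp16") -> float:
    # Weights only, in decimal gigabytes (1 GB = 1e9 bytes).
    return n_params * BYTES_PER_PARAM[dtype] / 1e9

for dtype in BYTES_PER_PARAM:
    print(dtype, weight_memory_gb(7e9, dtype))  # hypothetical 7B-parameter model
# fp32 28.0, fp16 14.0, int8 7.0, int4 3.5
```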

For applications that require real-time interaction, these limitations become key bottlenecks. Sending data between a device and the cloud is costly, adds network latency, and depends on a reliable connection. The result can be noticeable delays and a poor user experience, especially where connectivity is weak or unavailable.

In other words, despite all of the breakthroughs that transformers have enabled over the last few years, as we shift our focus from chatbots to other complex tasks like audio generation and AI agents, some people are starting to question whether pure attention-based architectures are always the optimal solution. As a result, researchers have been exploring new architectures that can handle long-range dependencies and also be deployed on-device.

A New Architecture: SSM

One of the new architectures that has shown promising results is state space models (SSMs). Unlike transformers that process and store every token, SSMs adopt a form of fuzzy compression where inputs are compressed into a fixed-size continuous state. In modern SSMs, like Mamba 2, state transitions are input-dependent, allowing the model to selectively retain or discard information based on context.
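A minimal sketch of the idea follows, loosely in the spirit of Mamba's input-dependent state updates but not its actual parameterization (Mamba uses learned projections, a discretized continuous-time system, and a hardware-aware scan). The weights `STATE_B` and `STATE_C` and the gate formula are hypothetical. The point is the shape of the computation: a fixed-size state updated once per token, with the input itself deciding how much of the old state to keep.

```python
import math

STATE_B = [1.0, 0.5, 0.25, 0.125]  # hypothetical input weights, one per state dimension
STATE_C = [1.0, 1.0, 1.0, 1.0]     # hypothetical output weights

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def selective_ssm(xs):
    """Toy selective SSM with a fixed 4-dimensional diagonal state.

    h_t = a_t * h_{t-1} + (1 - a_t) * B * x_t,   y_t = C . h_t
    The retain factor a_t depends on the current input (the "selective" part):
    a large |x_t| lowers a_t, so salient inputs overwrite more of the old state.
    """
    h = [0.0] * len(STATE_B)
    ys = []
    for x in xs:
        a = sigmoid(2.0 - 4.0 * abs(x))  # hypothetical input-dependent gate
        h = [a * hi + (1.0 - a) * b * x for hi, b in zip(h, STATE_B)]
        ys.append(sum(c * hi for c, hi in zip(STATE_C, h)))
    return h, ys

short_state, _ = selective_ssm([0.1] * 10)
long_state, _ = selective_ssm([0.1] * 10_000)
print(len(short_state), len(long_state))  # 4 4: state size ignores sequence length
```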

Since the state size remains constant regardless of sequence length, SSMs scale linearly in computation and need only constant memory for their state during generation, compared to the quadratic attention compute and linearly growing KV cache of transformers. Going back to our audio example, this means processing a 1-hour sequence with an SSM requires only proportionally more resources than a 1-minute sequence, instead of quadratically more.
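Counting operations makes the difference between "proportionally more" and "quadratically more" concrete. This sketch assumes one fixed-cost state update per token for the SSM and causal attention for the transformer; `TOKENS_PER_SECOND = 50` is a hypothetical rate that cancels out of both ratios.

```python
TOKENS_PER_SECOND = 50  # hypothetical token rate; it cancels out of the ratios

def ssm_updates(n_tokens: int) -> int:
    # One fixed-cost state update per token.
    return n_tokens

def attention_pairs(n_tokens: int) -> int:
    # Causal attention: token t scores itself and every earlier token.
    return n_tokens * (n_tokens + 1) // 2

minute, hour = 60 * TOKENS_PER_SECOND, 3600 * TOKENS_PER_SECOND
ssm_ratio = ssm_updates(hour) / ssm_updates(minute)
attention_ratio = attention_pairs(hour) / attention_pairs(minute)
print("SSM ratio:", ssm_ratio)                      # 60.0
print("attention ratio:", round(attention_ratio))   # roughly 3,600x
```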

This efficiency makes SSMs well suited to capturing long-range dependencies, which is particularly useful in tasks that require processing extended contexts, like video and audio generation. These are exactly the tasks that transformers currently struggle with because of their limited context windows.

Yet, there is no such thing as a free lunch: since SSMs compress information into a fixed state rather than storing explicit attention patterns, they aren't as effective at tasks requiring precise recall or exact pattern matching. While a transformer can directly look up specific details from thousands of tokens ago through its attention mechanism, an SSM must rely on the information it has managed to preserve in its state.

I personally view this as more of a feature than a bug – humans operate the same way. SSMs feel intuitively more similar to human intelligence – they remember the bigger picture instead of storing each minor detail.
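A deliberately tiny toy shows why a fixed-size state trades away exact recall. It assumes a one-number state updated as a decaying running sum (`compress_to_state` and `kv_cache_recall` are hypothetical names). Real SSM states are far larger and learned, so collisions are much less blunt, but with a fixed state size and finite precision the state cannot retain every detail of an arbitrarily long history.

```python
def kv_cache_recall(tokens, position):
    # A transformer-style cache keeps every token, so exact lookup is trivial.
    return tokens[position]

def compress_to_state(tokens, decay=0.5):
    # Toy one-number "state": an exponentially decaying running sum.
    h = 0.0
    for x in tokens:
        h = decay * h + x
    return h

seq_a = [0.0, 1.0]
seq_b = [1.0, 0.5]
# Different histories, identical compressed state...
print(compress_to_state(seq_a), compress_to_state(seq_b))  # 1.0 1.0
# ...so the state alone cannot tell us the first token, while the cache can.
print(kv_cache_recall(seq_a, 0), kv_cache_recall(seq_b, 0))  # 0.0 1.0
```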

In practice, this trade-off means that SSMs and transformers have different strengths:

Transformers excel at tasks requiring precise recall and arbitrary access to past information

SSMs excel at tasks requiring efficient processing of long sequences and real-time generation

Beyond handling long-range dependencies, SSMs maintain a fixed-size state and scale linearly, so they can also run on-device with limited memory and compute. This makes them particularly suitable for applications that need real-time processing without constant network connectivity.
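To see why a fixed-size state matters on-device, compare the memory a transformer's KV cache needs with the memory of an SSM's recurrent state. The configuration numbers below (32 layers, 8 key/value heads of dimension 128, an SSM with inner width 4096 and state size 16, 2-byte values) are hypothetical and only loosely sized like a mid-sized model; the SSM figure also ignores smaller per-layer buffers such as convolution state.

```python
def kv_cache_bytes(n_tokens, n_layers=32, n_kv_heads=8, head_dim=128, bytes_per_value=2):
    # Keys and values (hence the factor 2) for every token, layer, and KV head.
    return 2 * n_tokens * n_layers * n_kv_heads * head_dim * bytes_per_value

def ssm_state_bytes(n_layers=32, d_inner=4096, d_state=16, bytes_per_value=2):
    # One fixed-size state per layer, independent of how many tokens were seen.
    return n_layers * d_inner * d_state * bytes_per_value

for n in (1_000, 128_000):
    print(f"{n:>7} tokens: KV cache {kv_cache_bytes(n) / 1e9:.2f} GB, "
          f"SSM state {ssm_state_bytes() / 1e6:.2f} MB")
```

The KV cache grows with every token generated, while the SSM state stays the same size no matter how long the stream runs, which is what makes long-running generation feasible on memory-constrained hardware.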

As a result of these key benefits, researchers are beginning to explore whether SSMs will be the path forward for real-time intelligence. One company in particular, Cartesia, has already made significant progress in this direction, which is why I’ve chosen it as the second company in this “Startups to Join” series. It’s also why they’ve raised a $27M seed round led by Index Ventures with participation from Lightspeed, Factory, Conviction, General Catalyst, A*, SV Angel, and 90 angel investors.

Cartesia

Cartesia was founded by Albert Gu, Karan Goel, Chris Ré, Arjun Desai, and Brandon Yang (the researchers behind modern SSMs and early models like S4, Mamba, and Mamba 2). It aims to let developers build and run real-time multimodal experiences on any device.

While that’s a big vision, they’ve already made a strong start with the launch of Sonic, the world’s fastest generative voice API. Since audio generation requires low latency, is highly compressible, and needs long context windows, the SSM architectures that the Cartesia team has been pioneering for the last four years are uniquely suited to this use case.

Today, Sonic is accessible via a web playground and API. Users can generate audio in multiple languages and also clone any voice with just 15 seconds of audio. Users can also customize the tone of the voice, specializing the sound for different use cases.

The team at Cartesia has built an inference stack that serves Sonic at very low latency (90ms) and high throughput. Beyond being the fastest audio model, Sonic can also be deployed on-device, which opens up many interesting use cases. Sonic is already in production with thousands of customers, ranging from individual creators and startups to large enterprises.

One of the reasons Sonic is so effective is that Cartesia “created a new SSM architecture for multi-stream models that continuously reason and generate over multiple data streams of different modalities in parallel”. This means that their system maintains separate state spaces for different modalities (text and audio), connected through a conditioning mechanism. This gives precise control over the output while still producing natural speech. If you test out the product, you’ll immediately notice the difference between Sonic and other audio generation models: it handles long transcripts and context, stays on topic, and rarely hallucinates.
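The quoted description does not give implementation details, so the following is only a generic toy of the idea it describes: two fixed-size states (one per modality) advanced in lockstep, with the audio update conditioned on the text state. Everything here (`two_stream_step`, `run_streams`, `decay`, `coupling`, the tanh update) is hypothetical and is not Cartesia's architecture.

```python
import math

def two_stream_step(text_state, audio_state, text_in, audio_in, decay=0.8, coupling=0.5):
    """Advance two fixed-size states by one step (hypothetical toy)."""
    # The text stream keeps its own state, updated only from text input.
    new_text = [decay * t + (1 - decay) * x for t, x in zip(text_state, text_in)]
    # The audio stream keeps a separate state whose update is conditioned on the text state.
    new_audio = [decay * a + (1 - decay) * math.tanh(x + coupling * t)
                 for a, x, t in zip(audio_state, audio_in, new_text)]
    return new_text, new_audio

def run_streams(text_frames, audio_frames, dim=2):
    text_state, audio_state = [0.0] * dim, [0.0] * dim
    # Both streams advance together, one frame per step.
    for text_in, audio_in in zip(text_frames, audio_frames):
        text_state, audio_state = two_stream_step(text_state, audio_state, text_in, audio_in)
    return text_state, audio_state

audio = [[0.1, -0.2]] * 20
_, audio_a = run_streams([[1.0, 0.0]] * 20, audio)
_, audio_b = run_streams([[0.0, 1.0]] * 20, audio)
print(audio_a != audio_b)  # True: different text conditioning steers the audio state
```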

And while their audio model is already state of the art, it’s Cartesia’s broader goal of bringing real-time intelligence across multiple modalities on-device that makes their product roadmap so exciting.

Closing Thoughts + Jobs at Cartesia

SSMs are an incredibly interesting area of AI research right now, and there is no better team to work with than the one that invented the architecture and is currently deploying it to thousands of customers. As hybrid models continue to gain traction and SSMs are deployed in more areas, I think Cartesia is uniquely positioned to build models that can instantly understand and generate any modality. And if my case for why SSMs are interesting isn’t compelling enough, take it from Jensen Huang himself:

If working on today’s version of the transformer sounds exciting, Cartesia is actively hiring across 20 positions, including AI researchers, product engineers, business operations, growth, and designers. The Cartesia team also has a long history of hiring interns, from both undergrad and grad programs.